Email / CV / LinkedIn / Google Scholar / X (Twitter) / GitHub

I am a research scientist at NVIDIA, working on deep generative models for sequences with a particular focus on speech and audio. I received my Ph.D. from the Data Science & AI Lab (DSAIL) at Seoul National University. During my Ph.D., I was a research intern at NVIDIA, advised by Wei Ping and Boris Ginsburg. Before that, I completed internships at Microsoft Research Asia, where I was advised by Xu Tan and Tao Qin (speech) and by Bin Shao (bioinformatics). I received my B.S. in Electrical and Computer Engineering from Seoul National University.

My research interests span a wide range of deep generative models (autoregressive, flow, GAN, diffusion, etc.) applied to sequential data. Currently, I am working on building multimodal large language models with a focus on audio.

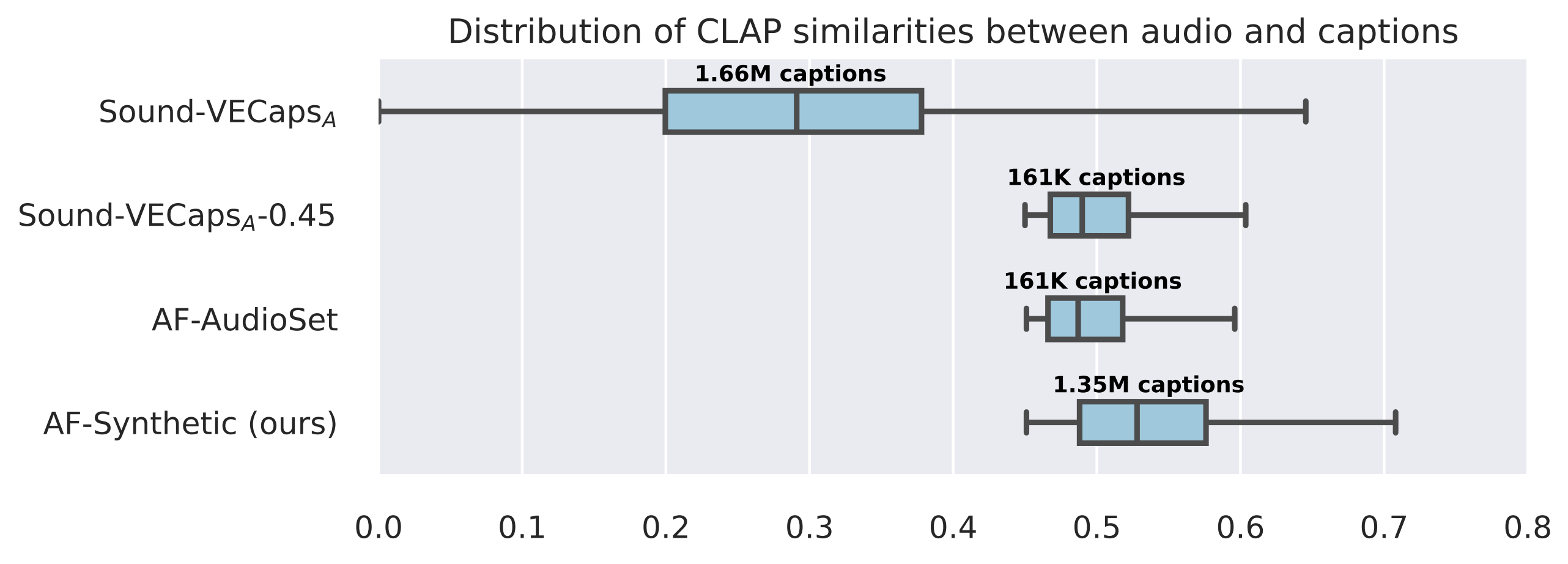

Sang-gil Lee*, Zhifeng Kong*, Arushi Goel, Sungwon Kim, Rafael Valle, Bryan Catanzaro. arXiv preprint, 2024. Project Page / arXiv

ETTA is the first text-to-audio model with emergent abilities: by leveraging large-scale synthetic audio captions (AF-Synthetic), it can synthesize entirely novel, imaginative sounds beyond the real world.

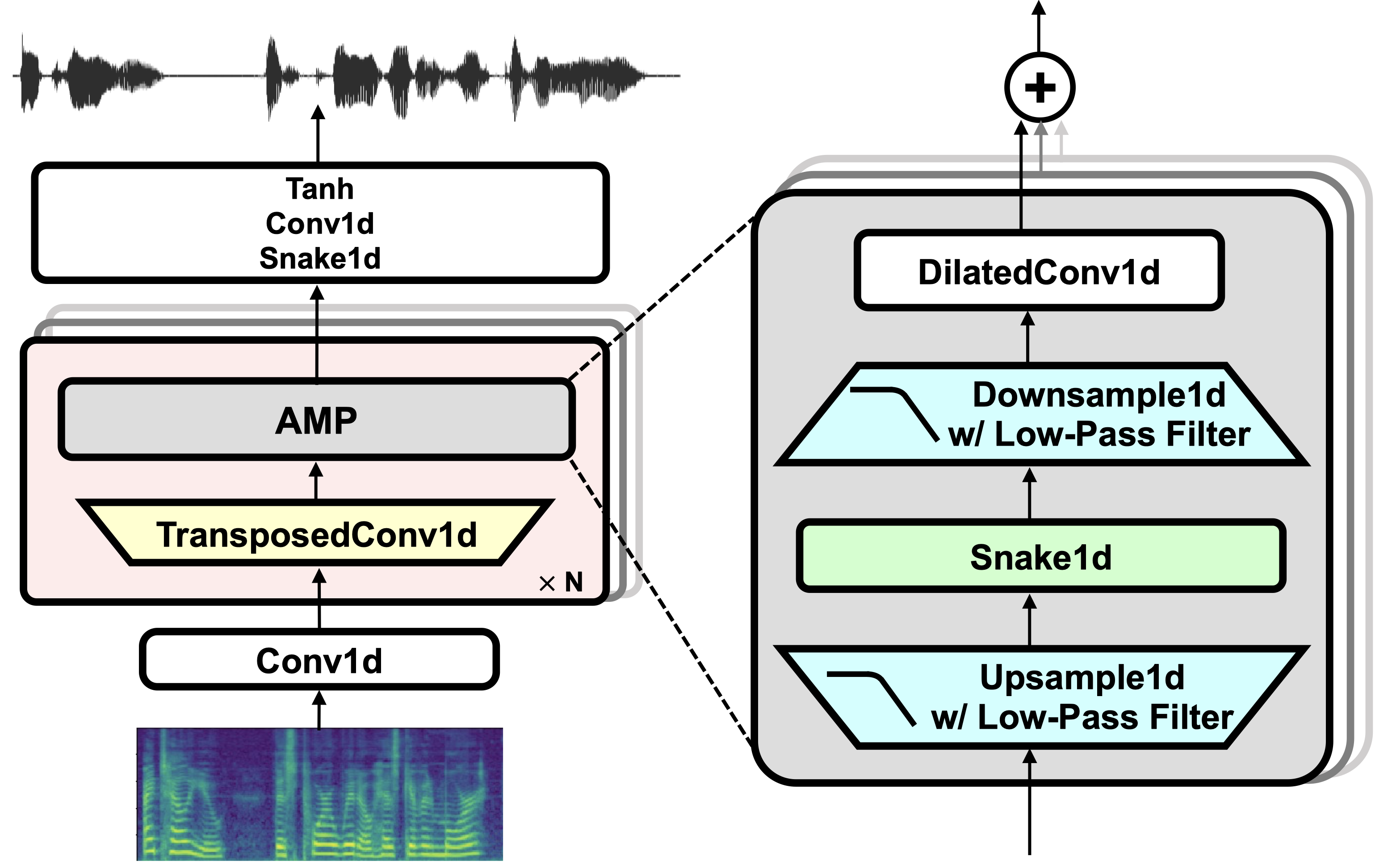

Sang-gil Lee, Wei Ping, Boris Ginsburg, Bryan Catanzaro, Sungroh Yoon. International Conference on Learning Representations (ICLR), 2023. Project Page / Model / arXiv / Code / Demo

BigVGAN is a universal audio synthesizer that achieves unprecedented zero-shot performance in various unseen environments by combining an anti-aliased periodic nonlinearity with large-scale training.

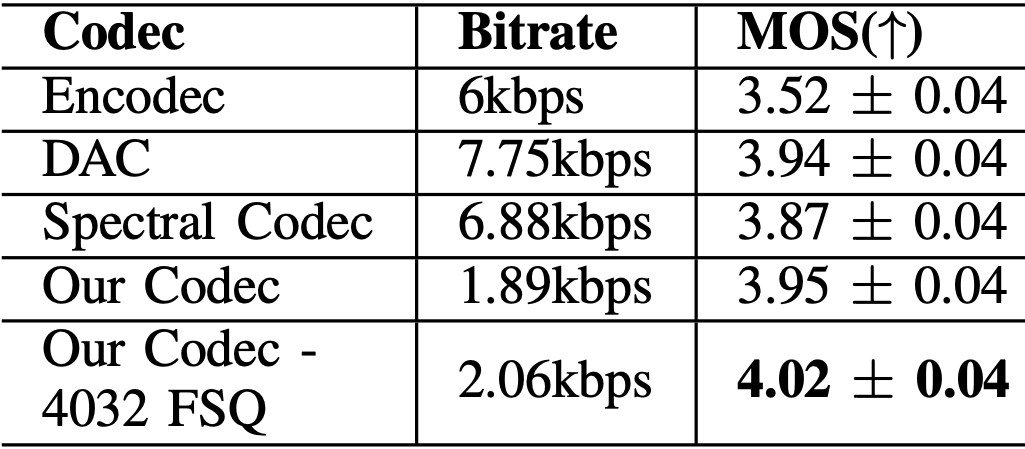

Edresson Casanova, Ryan Langman, Paarth Neekhara, Shehzeen Hussain, Jason Li, Subhankar Ghosh, Ante Jukić, Sang-gil Lee. ICASSP, 2025. Project Page / Model / arXiv / Code

A neural audio codec that leverages finite scalar quantization and adversarial training with large speech language models to achieve high-quality audio compression at a bitrate of 1.89 kbps and a frame rate of 21.5 frames per second.

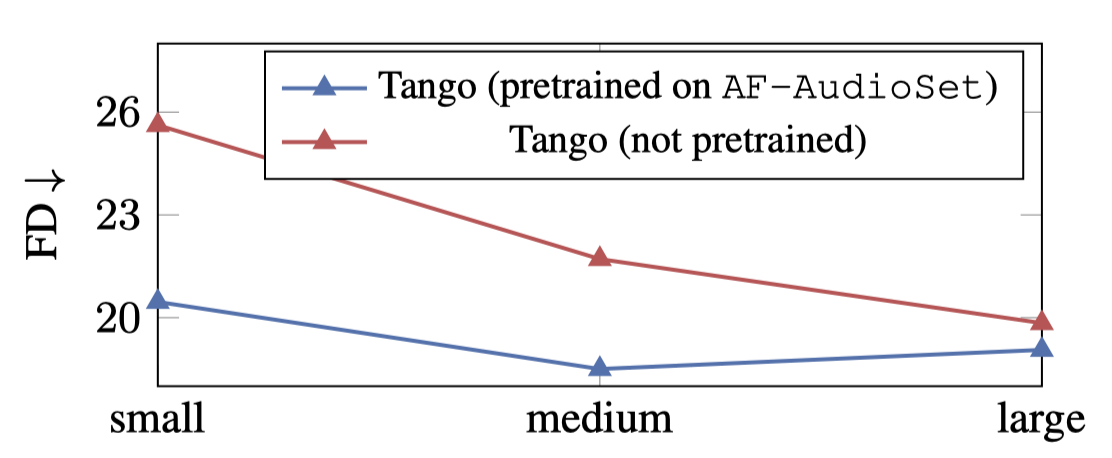

Zhifeng Kong*, Sang-gil Lee*, Deepanway Ghosal, Navonil Majumder, Ambuj Mehrish, Rafael Valle, Soujanya Poria, Bryan Catanzaro. Interspeech SynData4GenAI, 2024. Dataset / Model / arXiv

AF-AudioSet is a large-scale audio dataset featuring synthetic captions generated by Audio Flamingo, enabling significant improvements in text-to-audio models.

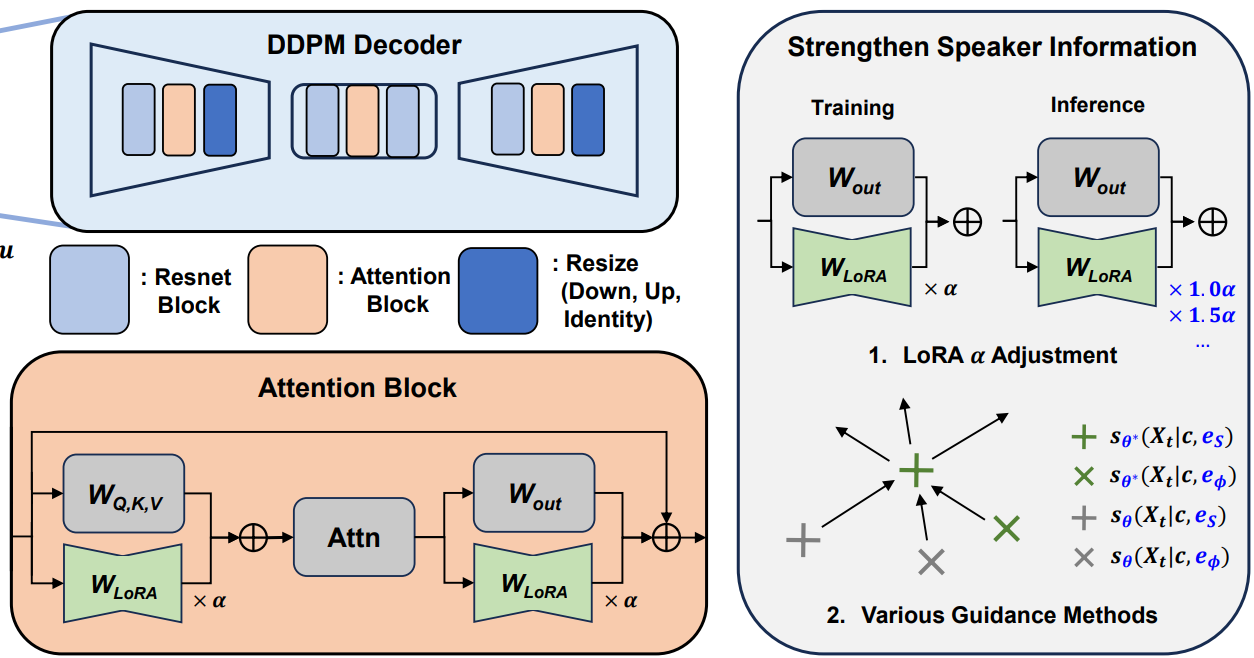

Heeseung Kim, Sang-gil Lee, Jiheum Yeom, Che Hyun Lee, Sungwon Kim, Sungroh Yoon. INTERSPEECH, 2024. Project Page / arXiv

VoiceTailor is a one-shot speaker-adaptive text-to-speech model that combines low-rank adapters to perform speaker adaptation in a parameter-efficient manner.

Chaehun Shin*, Heeseung Kim*, Che Hyun Lee, Sang-gil Lee, Sungroh Yoon. Asian Conference on Machine Learning (ACML), 2023 (Best Paper Award). Project Page / arXiv

Edit-A-Video is a diffusion-based one-shot video editing model that resolves background inconsistency using a new sparse-causal mask blending method.

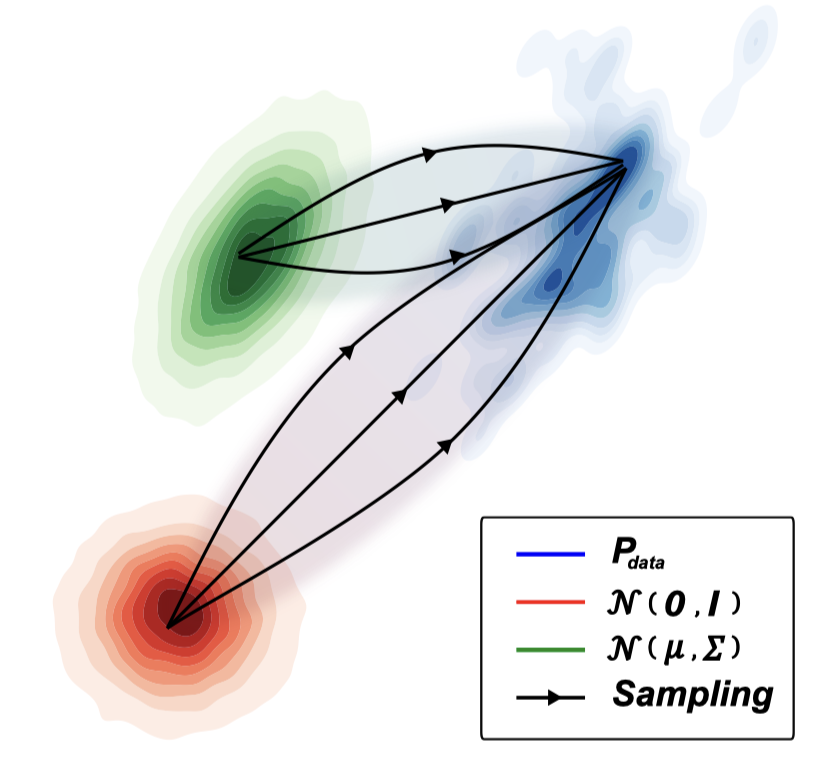

Sang-gil Lee, Heeseung Kim, Chaehun Shin, Xu Tan, Chang Liu, Qi Meng, Tao Qin, Wei Chen, Sungroh Yoon, Tie-Yan Liu. International Conference on Learning Representations (ICLR), 2022. Project Page / arXiv / Code / Poster

PriorGrad presents an efficient method for constructing a data-dependent, non-standard Gaussian prior for training and sampling from diffusion models applied to speech synthesis.

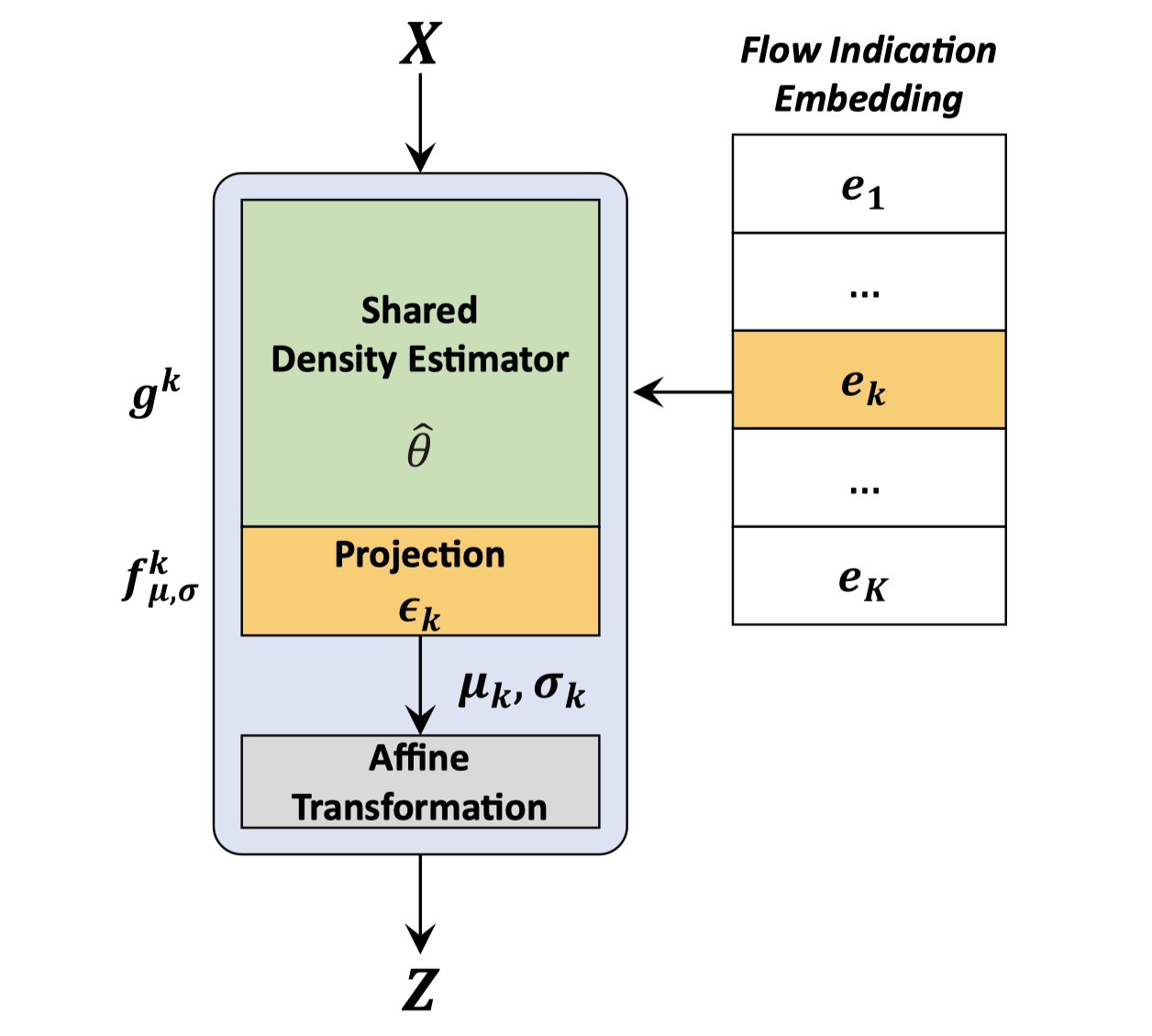

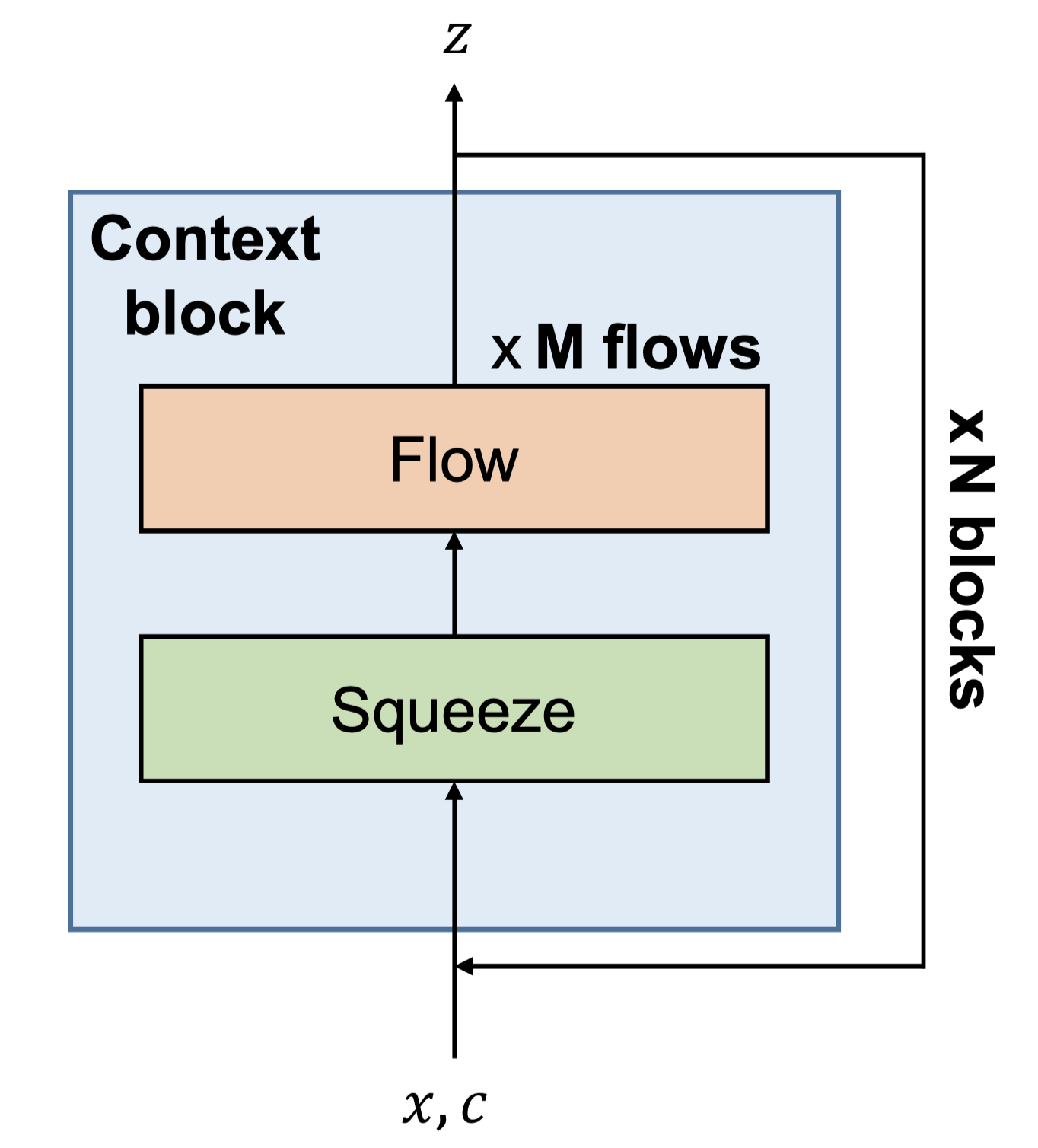

Sang-gil Lee, Sungwon Kim, Sungroh Yoon. Neural Information Processing Systems (NeurIPS), 2020. arXiv / Code / Poster

NanoFlow shares a single neural network across multiple transformation stages of a normalizing flow, providing efficient parameter compression for flow-based generative models.

Sungwon Kim, Sang-gil Lee, Jongyoon Song, Jaehyeon Kim, Sungroh Yoon. International Conference on Machine Learning (ICML), 2019. arXiv / Code / Demo / Poster

FloWaveNet is one of the first flow-based generative models for fast and parallel synthesis of audio waveforms, enabling a likelihood-based neural vocoder without any auxiliary loss.

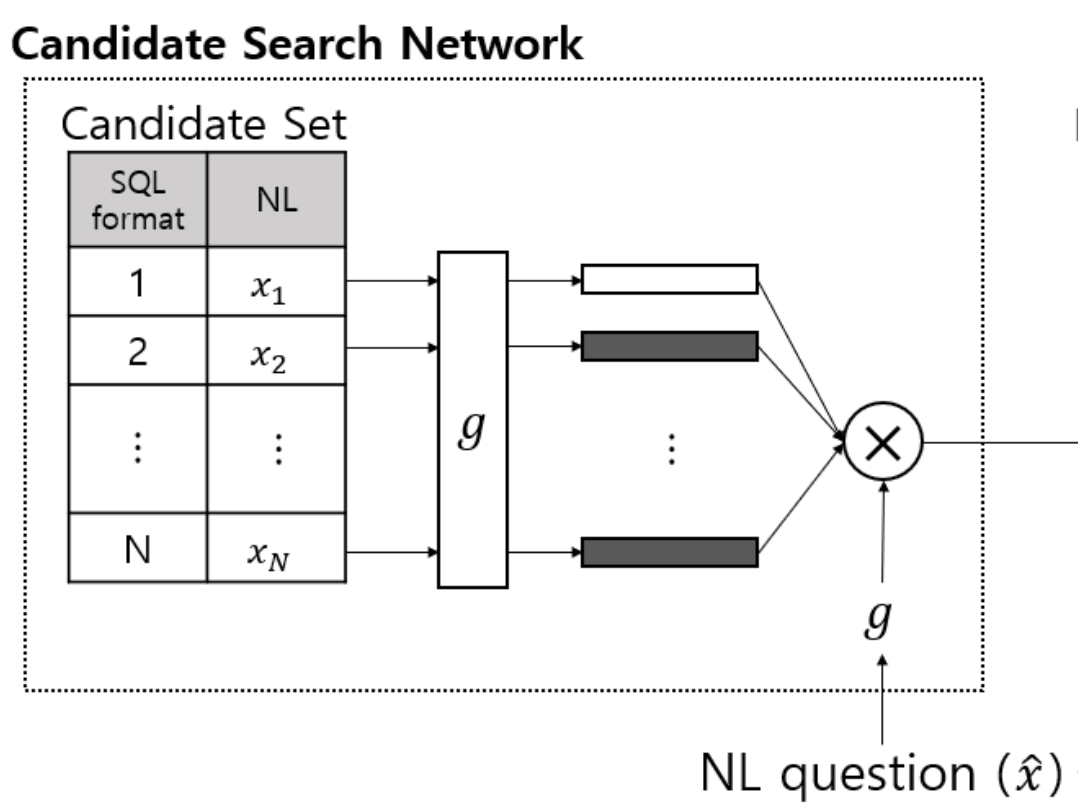

Dongjun Lee, Jaesik Yoon, Jongyoon Song, Sang-gil Lee, Sungroh Yoon. arXiv preprint, 2019. arXiv

A template-based one-shot text-to-SQL generative model built on a Candidate Search Network and a Pointer Network.

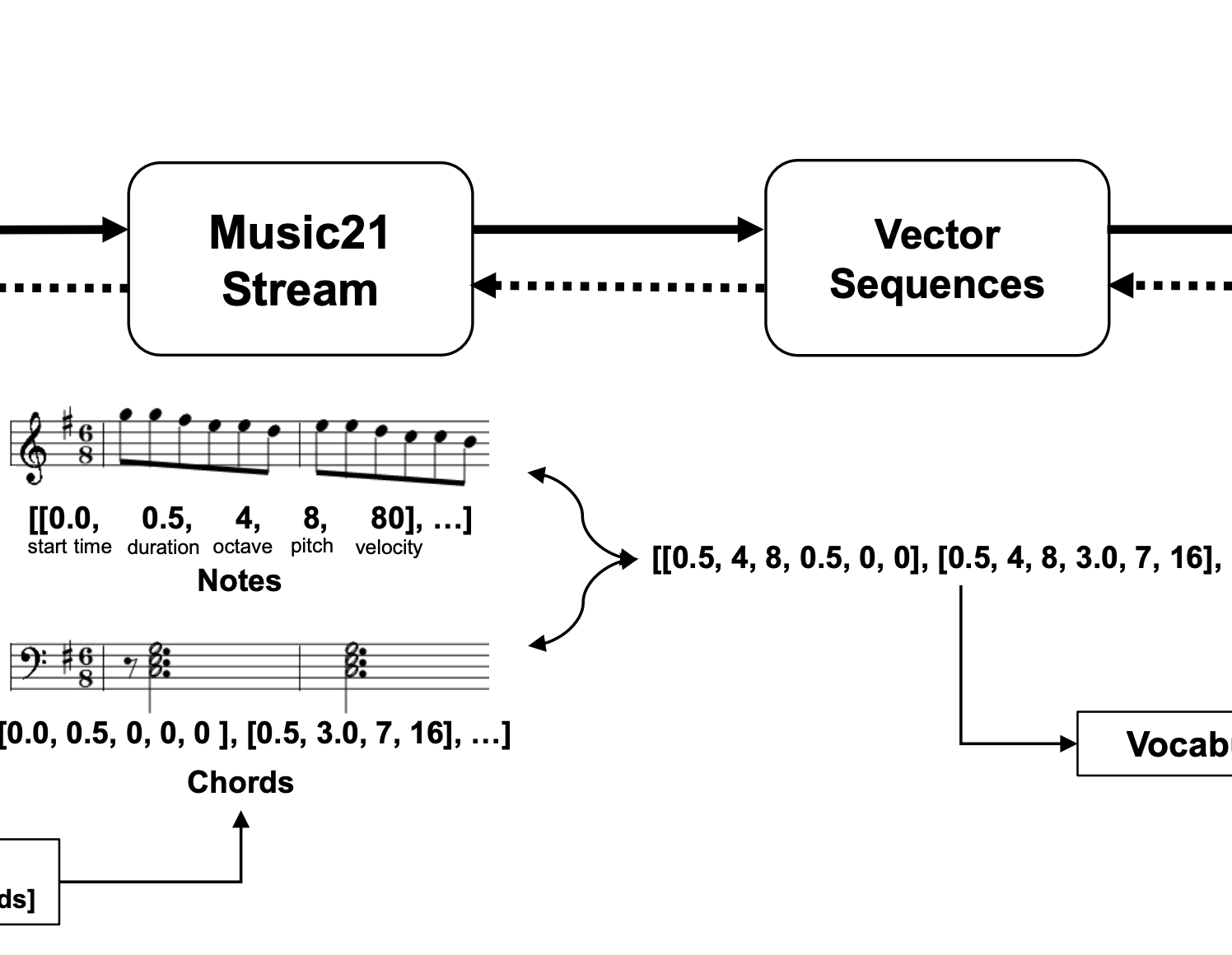

Sang-gil Lee, Uiwon Hwang, Seonwoo Min, Sungroh Yoon. arXiv preprint, 2017. arXiv / Code

This work investigates an efficient musical word representation of polyphonic MIDI data for SeqGAN that simultaneously captures chords and melodies with dynamic timings.

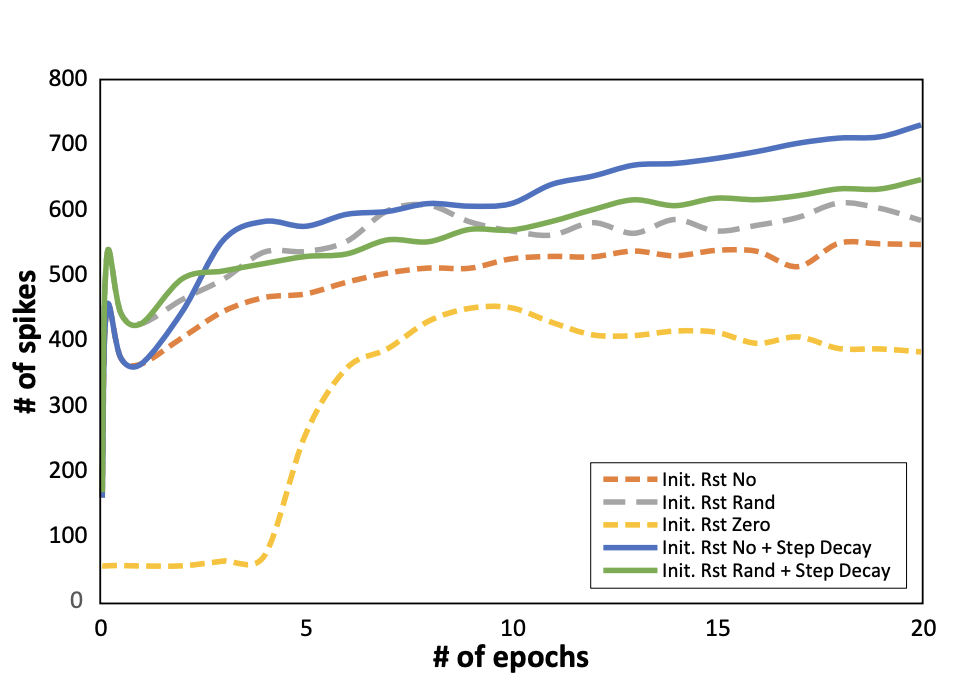

Seongsik Park, Sang-gil Lee, Hyunha Nam, Sungroh Yoon. Neural Information Processing Systems (NIPS) Workshop on Computing with Spikes, 2016. arXiv

This work investigates various initialization and backward control schemes for the membrane potential when training deep spiking neural networks.

Jan 2024 - Present: As a research scientist in NVIDIA's Applied Deep Learning Research team, I am working on building multimodal large language models with a focus on audio.

Sep 2021 - Jan 2022: As a research intern at NVIDIA, I worked on improving neural vocoders for high-quality speech and audio synthesis, advised by Wei Ping and Boris Ginsburg.

Feb 2023 - Jan 2024: I developed a framework for text-to-speech (TTS) research and development, optimized for deployment on edge devices.

Dec 2020 - May 2021: As a research intern at Microsoft Research Asia, I worked on diffusion-based generative models for speech synthesis, advised by Xu Tan, Chang Liu, Qi Meng, and Tao Qin.

Dec 2018 - Feb 2019: As a research intern at Microsoft Research Asia, I worked on the Antigen Map Project, applying sequence models to predict antigens from genetic sequences, advised by Bin Shao.

Jul 2019 - Sep 2019: I worked on improving speech synthesis and voice conversion models, advised by Jaehyeon Kim and Jaekyong Bae.

Ph.D., Electrical and Computer Engineering, Seoul National University: Sep 2016 - Feb 2023

B.S., Electrical and Computer Engineering / Applied Biology and Chemistry, Seoul National University: Mar 2010 - Aug 2016

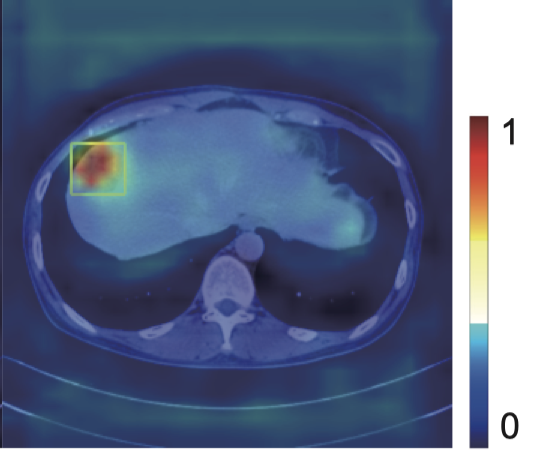

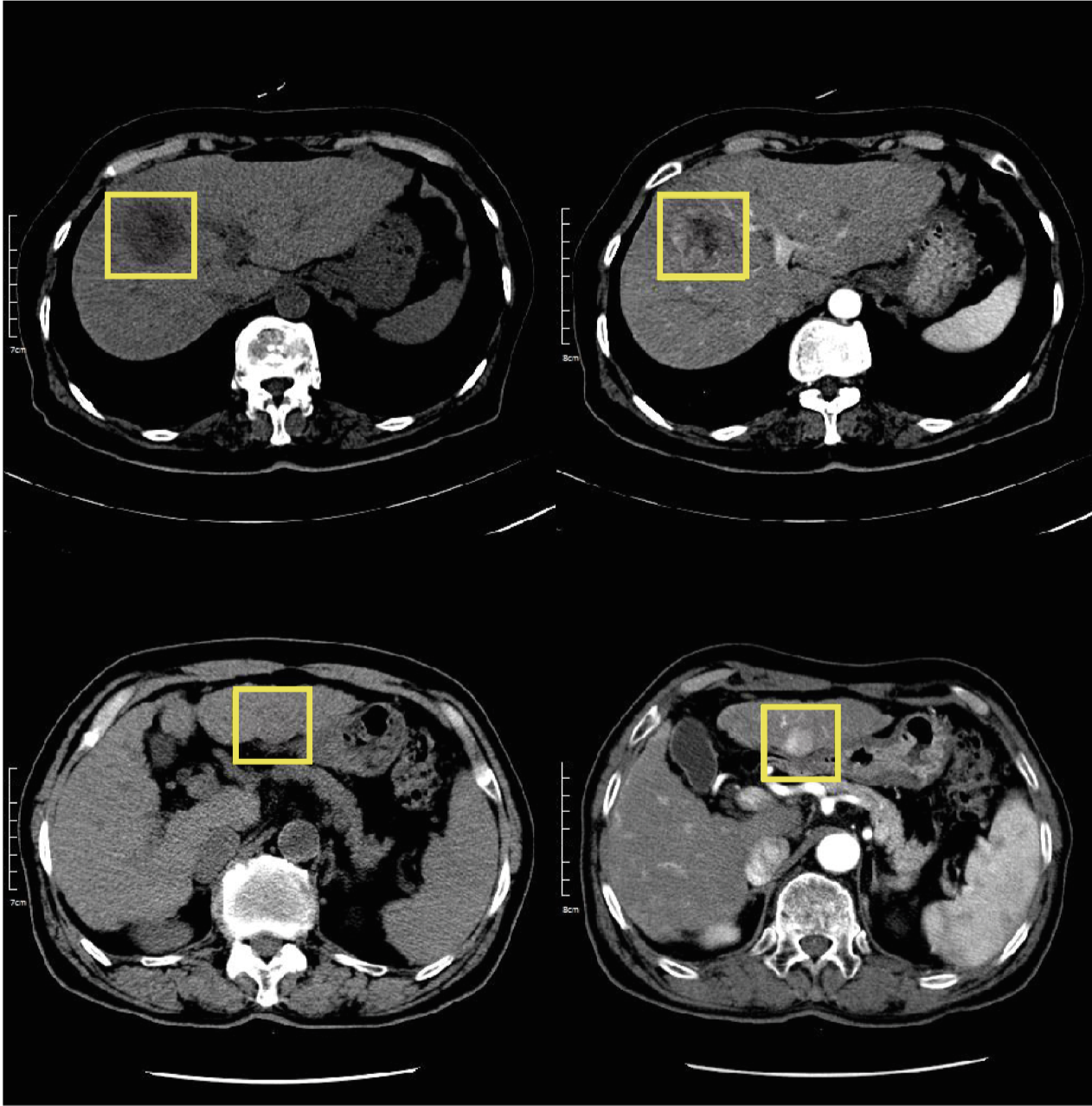

During my time at DSAIL, I collaborated with Seoul National University Hospital on a computer-aided diagnosis project for liver cancer. The project yielded a high-performance medical object detection model that helps reduce radiologists' errors in the early detection of liver disease.

Sang-gil Lee*, Eunji Kim*, Jae Seok Bae*, Jung Hoon Kim, Sungroh Yoon. IEEE Transactions on Emerging Topics in Computational Intelligence (TETCI), 2021. arXiv / Code

GSSD++ provides robustness to unregistered multi-phase CT images when detecting liver lesions, using attention-guided multi-phase alignment with deformable convolutions.

Sang-gil Lee, Jae Seok Bae, Hyunjae Kim, Jung Hoon Kim, Sungroh Yoon. International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), 2018. arXiv / Code

GSSD is a pioneering focal liver lesion detection model for multi-phase CT images that reflects the real-world clinical practice of radiologists.

I am a PC hardware enthusiast and enjoy learning about computers in my free time.

As a hobbyist DJ, I enjoy house music. My mixes are on YouTube.

Last update: Jan 2025. Template borrowed from here.